Certified Kubernetes Administrator

01 October, 2022

Resources

- Articles

- Videos

- Cheatsheet

- https://github.com/StenlyTU/K8s-training-official

- https://github.com/leandrocostam/cka-preparation-guide

- https://rx-m.com/cka-online-training/

- https://github.com/walidshaari/Kubernetes-Certified-Administrator

- https://github.com/alijahnas/CKA-practice-exercises

- https://gist.github.com/texasdave2/8f4ce19a467180b6e3a02d7be0c765e7

Notes

Features of Kubernetes

- Container Orchestration

- Application Reliability

- Automation

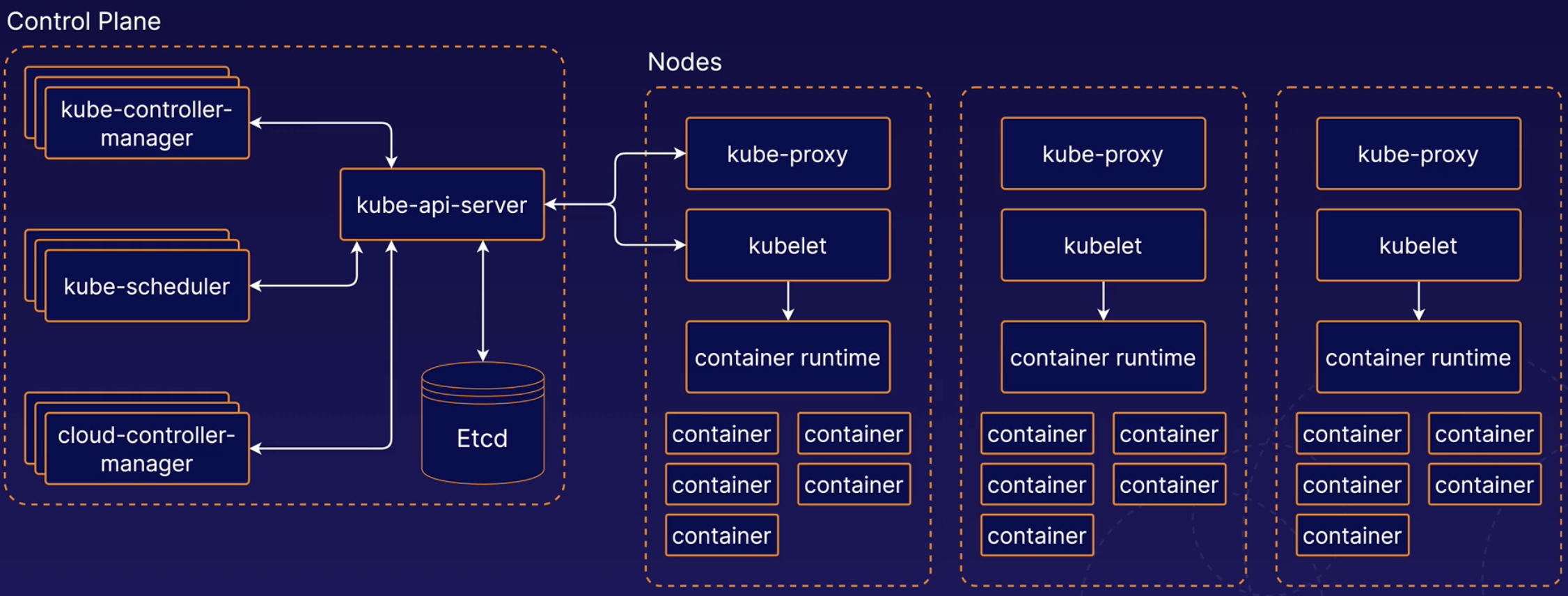

Architecture

- Control Plane

- Runs the cluster

- Was formerly referred to as the master

- Components

- kube-apiserver: Provides the Kubernetes API

- etcd: Backend data store for the Kubernetes cluster

- kube-scheduler: Handles scheduling, the process of selecting an available node in the cluster on which to run containers

- kube-controller-manager: Runs a collection of multiple controller utilities in a single process

- cloud-controller-manager: Provides an interface between Kubernetes and various cloud platforms. Useful when using Cloud providers like AWS, GCP, Azure, etc.

- Nodes

- kubelet: Agent that runs on every node. Communicates with the control plane and ensures that containers are run on its node as instructed. Also reports container status and other data about containers back to the control plane.

- container runtime: Not built into Kubernetes. It is a separate piece of software responsible for running containers on the machine. Examples include containerd and Docker.

- kube-proxy: A network proxy that runs on each node and handles tasks related to providing networking between containers and Services in the cluster.


Building a Kubernetes Cluster

Prerequisites:

- 3 Servers: 1 Control Plane, 2 Nodes

Set the hostnames

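# On the control plane server
sudo hostnamectl set-hostname k8s-control

# On the first and second worker servers, respectively
sudo hostnamectl set-hostname k8s-worker1
sudo hostnamectl set-hostname k8s-worker2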

Set up mapping between servers

- Edit /etc/hosts on every server and add one entry per node, replacing PRIVATEIP with each server's private IP address:

PRIVATEIP k8s-control
PRIVATEIP k8s-worker1
PRIVATEIP k8s-worker2

Restart all servers (or log out and log back in). You should then see the updated hostnames.

Installing containerd

- Configure the required kernel modules to load on boot:

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF

- Load the modules now:

sudo modprobe overlay
sudo modprobe br_netfilter

Configuration that Kubernetes needs for networking

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

- Apply the configuration:

sudo sysctl --system

Install containerd

sudo apt-get update && sudo apt-get install -y containerd

containerd configuration file

sudo mkdir -p /etc/containerd
sudo containerd config default | sudo tee /etc/containerd/config.toml
sudo systemctl restart containerd

Installing Kubernetes packages

# Disable swap
sudo swapoff -a

# Install packages required to fetch the Kubernetes repository
sudo apt-get update && sudo apt-get install -y apt-transport-https curl

# Install the repository GPG key
sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

# Add the Kubernetes package repository
echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Install the Kubernetes packages
sudo apt-get update && sudo apt-get install -y kubelet=1.24.0-00 kubeadm=1.24.0-00 kubectl=1.24.0-00

# Hold the packages to prevent unintended upgrades
sudo apt-mark hold kubelet kubeadm kubectl

Note: the apt.kubernetes.io / packages.cloud.google.com repository has since been deprecated in favour of pkgs.k8s.io, so these repository commands may no longer work as written.

Repeat the same installation steps (containerd and Kubernetes packages) on each worker node.

On Control Plane server

- Initialise the Kubernetes cluster:

sudo kubeadm init --pod-network-cidr 192.168.0.0/16 --kubernetes-version 1.24.0

- Set up kubeconfig and check access to the cluster:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes

- Install Calico:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

- Join the worker nodes to the cluster. First print the join command on the control plane:

kubeadm token create --print-join-command

- Run the generated command on each worker node as the root user (e.g. with sudo)

- Check that all nodes are connected using

kubectl get nodes

Namespaces

- Virtual clusters backed by the same physical cluster. Namespaced objects such as Pods and Deployments live in namespaces.

- Get namespaces:

kubectl get namespaces

- Create a namespace:

kubectl create namespace my-namespace

- Access objects in a namespace:

kubectl -n my-namespace get pods

- Access objects across all namespaces:

kubectl get pods -A
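Namespaces can also be created declaratively. A minimal sketch, assuming the `my-namespace` name used above; the Pod name `ns-demo` is hypothetical. The Pod is placed in the namespace through `metadata.namespace` rather than the `-n` flag.

```yaml
# Declarative equivalent of `kubectl create namespace my-namespace`
apiVersion: v1
kind: Namespace
metadata:
  name: my-namespace
---
# A Pod created directly inside that namespace
apiVersion: v1
kind: Pod
metadata:
  name: ns-demo              # hypothetical name
  namespace: my-namespace
spec:
  containers:
    - name: nginx
      image: nginx:alpine
```

Both documents can be applied together with `kubectl apply -f <file name>`. The Namespace is listed first so it exists before the Pod that refers to it.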

High availability in Kubernetes

- A highly available (HA) cluster needs multiple control plane nodes.

- The control plane nodes are accessed through a load balancer. The kubelets on worker nodes connect to the load balancer instead of connecting directly to a single control plane node.

- Stacked etcd

- Each control plane node runs its own etcd instance

- External etcd

- etcd runs on separate hosts outside the control plane nodes

- The etcd cluster can be sized independently of the number of control plane nodes
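With a load balancer and external etcd, kubeadm can read both settings from a configuration file instead of flags. The sketch below assumes kubeadm's `v1beta3` config API (the version used by the 1.22 to 1.24 releases in these notes). The load balancer address and etcd hostnames are hypothetical. The certificate paths follow the layout used in the kubeadm HA documentation, but check them against your own setup.

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.0
# Load balancer in front of all control plane nodes (hypothetical address)
controlPlaneEndpoint: "k8s-lb.example.internal:6443"
networking:
  podSubnet: 192.168.0.0/16   # same CIDR as --pod-network-cidr used earlier
etcd:
  external:
    # Hypothetical external etcd members, running outside the control plane
    endpoints:
      - https://etcd1.example.internal:2379
      - https://etcd2.example.internal:2379
      - https://etcd3.example.internal:2379
    # Client certificates the API server uses to talk to etcd
    caFile: /etc/kubernetes/pki/etcd/ca.crt
    certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt
    keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key
```

On the first control plane node this would be used as `sudo kubeadm init --config kubeadm-config.yaml --upload-certs`, where the file name is an assumption. `--upload-certs` shares the control plane certificates so that additional control plane nodes can join.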

Kubernetes Management tools

- kubectl: the official CLI for accessing and working with a Kubernetes cluster

- kubeadm: the official tool for creating Kubernetes clusters

- minikube: sets up a local single-node Kubernetes cluster

- Helm: templating and package management for Kubernetes objects

- Kompose: converts Docker Compose files into Kubernetes objects

- Kustomize: a configuration management tool for Kubernetes object configurations

Safely draining a kubernetes node

- Drain a node to remove it from service before performing maintenance. Containers running on the node are gracefully terminated and, if they are managed by a controller such as a Deployment, rescheduled on another node.

# Drain the node, ignoring Pods managed by DaemonSets
kubectl drain <node name> --ignore-daemonsets

# After maintenance, uncordon the node so Pods can be scheduled on it again
kubectl uncordon <node name>

Upgrading K8s using kubeadm

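- Control Plane

# Drain the control plane node
kubectl drain k8s-control --ignore-daemonsets

# Upgrade kubeadm and check its version
sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubeadm=1.22.2-00
kubeadm version

# Plan and apply the upgrade
sudo kubeadm upgrade plan v1.22.2
sudo kubeadm upgrade apply v1.22.2

# Upgrade kubelet and kubectl, then restart the kubelet
sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubelet=1.22.2-00 kubectl=1.22.2-00
sudo systemctl daemon-reload
sudo systemctl restart kubelet

# Make the node schedulable again and verify
kubectl uncordon k8s-control
kubectl get nodes

- Worker Nodes

# On the control plane: drain the worker
kubectl drain k8s-worker --ignore-daemonsets --force

# On the worker: upgrade kubeadm, upgrade the node configuration, then upgrade kubelet and kubectl
sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubeadm=1.22.2-00
sudo kubeadm upgrade node
sudo apt-get install -y --allow-change-held-packages kubelet=1.22.2-00 kubectl=1.22.2-00
sudo systemctl daemon-reload
sudo systemctl restart kubelet

- On the control plane, uncordon the worker:

kubectl uncordon k8s-worker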

Backing Up and Restoring etcd Cluster Data

- etcd stores the state of the whole cluster. If the etcd data is lost and there is no backup, the cluster has to be rebuilt from scratch.

- To back up

ETCDCTL_API=3 etcdctl --endpoints <endpoint> --cacert=<cert path> --cert=<cert path> --key=<key path> snapshot save <file name>

- To restore

ETCDCTL_API=3 etcdctl snapshot restore <file name> --initial-cluster <> --initial-advertise-peer-urls <> --name <> --data-dir <>

kubectl

- A CLI tool that allows us to interact with Kubernetes. It uses the Kubernetes API.

- get: list objects in the cluster

kubectl get <object-type> <object-name> -o <output>

- describe: get detailed information about a Kubernetes object

kubectl describe <object type> <object name>

- create: create objects

kubectl create -f <file name>

- apply: similar to create, but updates the object if it already exists; otherwise it creates a new object

kubectl apply -f <file name>

- delete: delete objects from the cluster

kubectl delete <object-type> <object-name>

- exec: run a command inside a container

kubectl exec <pod name> -c <container name> -- <command>

- Tips

- Use imperative commands

kubectl create deployment <deployment name> --image=nginx --replicas=2

- Generate YAML from imperative commands

kubectl create deployment <deployment name> --image=nginx --dry-run=client -o yaml

- Record a command (the --record flag is deprecated)

kubectl scale deployment <deployment name> --replicas=5 --record

Managing K8s Role-Based access control (RBAC)

- Controls what users are allowed to do and access within the cluster

- Roles/ClusterRoles: define a set of permissions, i.e. what users can do in the cluster. A Role defines permissions within a particular namespace; a ClusterRole defines cluster-wide permissions that are not specific to a single namespace
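A ClusterRole is written like a Role but has no `metadata.namespace`, and a ClusterRoleBinding grants it in every namespace. The sketch below mirrors the `pod-reader` Role for the user `dev` in this section. The names `pod-reader-global` and `dev-pod-reader-global` are hypothetical.

```yaml
# Cluster-scoped: no metadata.namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-reader-global          # hypothetical name
rules:
  - apiGroups: [""]                # "" = core API group (Pods live here)
    resources: ["pods", "pods/log"]
    verbs: ["get", "watch", "list"]
---
# Grants the ClusterRole to user "dev" in all namespaces
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dev-pod-reader-global      # hypothetical name
subjects:
  - kind: User
    name: dev
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: pod-reader-global
  apiGroup: rbac.authorization.k8s.io
```

To check the result through impersonation, run `kubectl auth can-i list pods --as dev --all-namespaces`. A ClusterRole can also be referenced from a namespaced RoleBinding, which limits its permissions to that one namespace.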

# role.yml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
  - apiGroups: [""]
    resources: ["pods", "pods/log"]
    verbs: ["get", "watch", "list"]
# kubectl apply -f role.yml

# rolebinding.yml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader
  namespace: default
subjects:
  - kind: User
    name: dev
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
# kubectl apply -f rolebinding.yml

Service Account

- An account used by container processes within Pods to authenticate with the Kubernetes API.

- A ServiceAccount can be created using YAML or imperative commands

apiVersion: v1
kind: ServiceAccount
metadata:
  name: my-serviceaccount
# kubectl create sa my-serviceaccount

- Access control for service accounts can be managed using RBAC. Bind service accounts with RoleBindings or ClusterRoleBindings

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: sa-pod-reader
subjects:
  - kind: ServiceAccount
    name: my-serviceaccount
    namespace: default
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

Inspecting Pod Resource Usage

- To view metrics about the resources Pods and nodes are using, we need to install the Kubernetes Metrics Server

- We can then view the data using

kubectl top <pod | node> --selector <selector>

Managing application configuration

- Allows us to pass dynamic values to applications at runtime to control their behaviour

- Can be done via ConfigMaps

apiVersion: v1
kind: ConfigMap
metadata:
  name: my-configmap
data:
  key1: value1
  key2: value2
  # ConfigMap values must be strings, so nested data goes in a block string
  key3: |
    subkey:
      morekeys: data
      evenmore: some more data
  key4: |
    multi
    line
    data

- Can be done via Secrets. Values under data must be base64-encoded

apiVersion: v1
kind: Secret
metadata:
  name: my-secret
type: Opaque
data:
  username: dXNlcg==   # base64 of "user"
  password: bXlwYXNz   # base64 of "mypass"

- ConfigMaps or Secrets can be attached to containers as environment variables

spec:
  containers:
    - ...
      env:
        - name: ENVVAR
          valueFrom:
            configMapKeyRef:
              name: my-configmap
              key: key1

- ConfigMaps or Secrets can be mounted as volumes. Top-level data keys will appear as files

volumes:
  - name: secret-vol
    secret:
      secretName: my-secret
containers:
  - name: my-container
    image: nginx:alpine
    volumeMounts:
      - name: secret-vol
        mountPath: /opt/

Resource Requests

- Requests: the amount of CPU and memory a container is expected to need. The Kubernetes scheduler uses requests to decide which node has enough available resources to run the Pod.

- Limits: upper bounds on the container's resource usage, enforced at runtime. A container that exceeds its CPU limit is throttled; one that exceeds its memory limit may be terminated.

- If a Pod requests more resources than any node has available, it will never be scheduled and stays Pending

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: busybox
      image: busybox
      command: ["sleep", "3600"]   # keep the container running
      resources:
        requests:
          cpu: "250m"      # 0.25 CPU core
          memory: "128Mi"
        limits:
          cpu: "500m"      # 0.5 CPU core
          memory: "256Mi"

Monitoring Container Health with Probes

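- Probes help validate whether our applications are working as expected.

- Liveness probes: automatically determine whether the container application is in a healthy state. If a liveness probe keeps failing, the container is restarted.

- Startup probes: similar to liveness probes, but they only run while the container is starting up. Liveness and readiness probes are held off until the startup probe succeeds.

- Readiness probes: validate whether a container is ready to accept requests. Unlike startup probes, readiness probes keep running for the container's whole lifetime; while one is failing, the Pod receives no traffic from Services.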
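It is easier to see where the probe fragments go in a complete Pod. The sketch below assumes an nginx container serving `/` on port 80. The Pod name `probe-demo` and the probe periods are illustrative choices, not recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo                # hypothetical name
spec:
  containers:
    - name: nginx
      image: nginx:alpine
      ports:
        - containerPort: 80
      # Runs first; liveness and readiness checks wait until it succeeds
      startupProbe:
        httpGet:
          path: /
          port: 80
        failureThreshold: 30      # allows up to 30 x 5s = 150s to start
        periodSeconds: 5
      # Restarts the container if it keeps failing
      livenessProbe:
        httpGet:
          path: /
          port: 80
        periodSeconds: 10
      # Removes the Pod from Service endpoints while it is failing
      readinessProbe:
        httpGet:
          path: /
          port: 80
        periodSeconds: 5
```

All three probes sit under the container entry, not under the Pod `spec`. Each container in a multi-container Pod can therefore have its own probes.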

# liveness (exec)
livenessProbe:
  exec:
    command:
      - echo
      - "hello world"
  initialDelaySeconds: 5 # delay before starting
  periodSeconds: 5 # delay between each execution

# liveness (HTTP)
livenessProbe:
  httpGet:
    path: /
    port: 80
  initialDelaySeconds: 5 # delay before starting
  periodSeconds: 5 # delay between each execution

# startup
startupProbe:
  httpGet:
    path: /
    port: 80
  failureThreshold: 30
  periodSeconds: 5 # delay between each execution

# readiness
readinessProbe:
  httpGet:
    path: /
    port: 80
  initialDelaySeconds: 5
  periodSeconds: 5 # delay between each execution

Pod Restart Policies

- The restart policy lets us customise what happens when a Pod's containers stop or fail (restart them or not)

- Always: the default policy; containers are always restarted when they stop, even if they exit successfully.

- OnFailure: containers are restarted only if the container process exits with a non-zero exit code or the container is determined to be unhealthy by a liveness probe

- Never: the opposite of Always; containers are never restarted

apiVersion: v1
kind: Pod
metadata:
  name: restart-policy-pod
spec:
  restartPolicy: Always # or OnFailure, Never
  containers:
    - name: restart-pod
      image: nginx:alpine
      command: ["sh", "-c", "echo restart; sleep 10; exit 1"]

Multi-Containers Pods

- A Pod running multiple containers that share resources. Ideally there should be one container per Pod, unless the containers need to work closely together.

- Cross-container interaction: containers share the same network and can communicate with one another on any port (e.g. via localhost). Containers can also share volumes.

- Sidecar: a helper container, e.g. one that reads a log file from a shared volume and prints it to the console so the log output appears in the container log

apiVersion: v1
kind: Pod
metadata:
  name: multi-container
spec:
  containers:
    - name: nginx
      image: nginx:alpine
    - name: redis
      image: redis:alpine
    - name: couchbase
      image: couchbase

Init containers

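- Containers that run once, to completion, during the startup process of a Pod, before the app containers start.

- Init containers run sequentially: each one must complete successfully before the next one starts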
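The sequential ordering can be sketched with two init containers. The first waits until a Service name resolves, the second writes a page into a shared `emptyDir` volume, and only then does the app container start. The Service `my-service` in the `default` namespace and the Pod and container names are hypothetical. The wait loop follows the pattern from the Kubernetes documentation's init container example, which also uses `busybox:1.28`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-order-demo           # hypothetical name
spec:
  initContainers:
    # 1st: blocks until the (hypothetical) Service name resolves
    - name: wait-for-service
      image: busybox:1.28
      command: ["sh", "-c", "until nslookup my-service.default.svc.cluster.local; do echo waiting; sleep 2; done"]
    # 2nd: starts only after wait-for-service has exited successfully
    - name: write-page
      image: busybox:1.28
      command: ["sh", "-c", "echo ready > /work/index.html"]
      volumeMounts:
        - name: work
          mountPath: /work
  # App containers start only after every init container has completed
  containers:
    - name: nginx
      image: nginx:alpine
      volumeMounts:
        - name: work
          mountPath: /usr/share/nginx/html
  volumes:
    - name: work
      emptyDir: {}
```

While the first init container is still waiting, `kubectl get pod init-order-demo` reports an `Init:0/2` status.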

apiVersion: v1
kind: Pod
metadata:
  name: init-containers
spec:
  containers:
    - name: nginx
      image: nginx:alpine
  initContainers:
    - name: delay
      image: busybox
      command:
        - sleep
        - "100"

Kubernetes Scheduling

- The process of assigning Pods to nodes so the kubelet can run them

- Considerations:

- resource requests

- node labels, taints, affinity

- nodeSelector: added to a Pod to schedule it only on nodes with matching labels

apiVersion: v1
kind: Pod
metadata:
  name: nodeselector-pod
spec:
  nodeSelector:
    app: nginx # will be scheduled on a node with this label
  containers:
    - name: nginx
      image: nginx:alpine

- nodeName: schedules a Pod on a node with a specific name (this bypasses the scheduler)

apiVersion: v1
kind: Pod
metadata:
  name: nodename-pod
spec:
  nodeName: k8s-worker1
  containers:
    - name: nginx
      image: nginx:alpine

DaemonSets

- Automatically runs a copy of a Pod on each node, and runs it on newly added nodes as well

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: my-daemonset
spec:
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: nginx
          image: nginx:alpine

Static Pods

- A Pod that is managed directly by the kubelet on a node, not by the Kubernetes API server.

- Mirror Pods: the kubelet creates a mirror Pod for each static Pod. Mirror Pods let you see the status of the static Pod via the Kubernetes API, but you can't change or manage them through the API

- Default static Pod location

/etc/kubernetes/manifests

# /etc/kubernetes/manifests/my-static-pod.yml
apiVersion: v1
kind: Pod
metadata:
  name: static-pod
spec:
  containers:
    - name: nginx
      image: nginx:alpine

- The kubelet automatically watches the directory for YAML files and creates the Pods

- The Pod can be seen via the Kubernetes API, but it is a mirror Pod. Deleting it through the API has no lasting effect, because the kubelet recreates it. To remove a static Pod, delete its manifest file from the directory.
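The static Pod directory is not hard-coded: the kubelet reads it from the `staticPodPath` field of its configuration file. Below is a sketch of the relevant part. It assumes a kubeadm-built node, where that file is `/var/lib/kubelet/config.yaml`. A real file contains many more fields.

```yaml
# /var/lib/kubelet/config.yaml (excerpt)
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# Directory the kubelet watches for static Pod manifests
staticPodPath: /etc/kubernetes/manifests
```

On an unfamiliar node, `grep staticPodPath /var/lib/kubelet/config.yaml` shows which directory is in use. After changing it, restart the kubelet with `sudo systemctl restart kubelet`.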

Deployment

An object that defines a desired state for a ReplicaSet. The Deployment maintains that state by creating, deleting and replacing Pods with new configurations

replicas: number of replica Pods the deployment will seek to maintain

selector: label selector used to identify the replica Pods managed by the Deployment

template: Pod definition used to create the Pod replicas

Use cases:

- easily scale pods up and down

- perform rolling updates to deploy a new software version

- roll back to a previous version of the software

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: node
  template:
    metadata:
      labels:
        app: node
    spec:
      containers:
        - name: node
          image: node:alpine
          command:
            - sleep
            - "1000"
# k get deploy,pods

Scaling deployment

- Dedicating more or fewer resources to an application by adding or removing Pods.

- We can either edit the existing Deployment to update the number of replicas or use the imperative command

# edit the Deployment, then change the `replicas` value
k edit deploy <deployment name>

# or scale imperatively
k scale deploy <deployment name> --replicas=<number of replicas>

Rolling Updates

- Rolling updates allow you to change a Deployment's Pods at a controlled rate, gradually replacing old Pods with new ones. This allows updates without downtime.

- A rollback moves the Deployment back to a previous revision

...
strategy:
  type: RollingUpdate
  rollingUpdate:
    maxSurge: 25%
    maxUnavailable: 10%

- Check rollout status

kubectl rollout status deploy <deployment name>

- Check rollout history

kubectl rollout history deploy <deployment name>

- Undo a rollout

kubectl rollout undo deploy <deployment name>
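A full Deployment makes it possible to work out how `maxSurge` and `maxUnavailable` translate into Pod counts. The sketch below uses hypothetical names and reuses the 25%/10% values from the strategy fragment above. Kubernetes rounds a percentage `maxSurge` up and a percentage `maxUnavailable` down. With 3 replicas, the update may therefore run at most one extra Pod and never drops below 3 available Pods.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rolling-demo              # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: rolling-demo
  strategy:
    type: RollingUpdate
    rollingUpdate:
      # 25% of 3 = 0.75, rounded up to 1 -> at most 3 + 1 = 4 Pods during the update
      maxSurge: 25%
      # 10% of 3 = 0.3, rounded down to 0 -> at least 3 - 0 = 3 Pods stay available
      maxUnavailable: 10%
  template:
    metadata:
      labels:
        app: rolling-demo
    spec:
      containers:
        - name: nginx
          image: nginx:alpine
```

To trigger a rollout, change the image with `kubectl set image deployment/rolling-demo nginx=nginx:stable-alpine`, then follow it with `kubectl rollout status deployment/rolling-demo`. Because `maxUnavailable` rounds to 0 here, each old Pod is removed only after its replacement is ready.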

Networking

- The Kubernetes network model is a set of standards that define how networking between Pods behaves. There are different implementations of this model, including the Calico network plugin.

- Each Pod has its own unique IP address within the cluster

- CNI plugins:

- A type of Kubernetes network plugin that provides network connectivity between Pods

- Check the Kubernetes documentation to understand which plugin to use.

- Calico is a good default option

- DNS in K8s

- The cluster's virtual network uses DNS to allow Pods to locate other Pods and Services by domain name instead of IP address.

- DNS runs as a service within the cluster in the kube-system namespace. kubeadm clusters use CoreDNS

- A Pod's domain name looks like this, with the dots in the Pod IP replaced by dashes:

pod-ip-address.namespace-name.pod.cluster.local

e.g. 192-168-10-100.default.pod.cluster.local

- Network Policies

- An object that controls the flow of network traffic to and from Pods, allowing us to build a secure cluster network by keeping Pods isolated from traffic they do not need.

- podSelector: determines which Pods in the namespace the NetworkPolicy applies to. By default, if no NetworkPolicy selects a Pod, all traffic to and from it is allowed

- Ingress: incoming traffic to the Pod. Use from selectors to define which incoming traffic is allowed

- Egress: outgoing traffic from the Pod. Use to selectors to define which outgoing traffic is allowed

- from and to selectors

- podSelector

- namespaceSelector

- ipBlock

- ports: specifies one or more ports on which incoming/outgoing traffic is allowed
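Below is a sketch that puts the selector types together in one policy for hypothetical `app=db` Pods. Ingress is allowed on TCP 5432 from `app=web` Pods in the same namespace, or from any Pod in a namespace labelled `team=ops`. Egress is allowed only to TCP 443 in a hypothetical `10.0.0.0/24` range. All labels, ports, names and the CIDR are assumptions for illustration.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-allow-web              # hypothetical name
  namespace: default
spec:
  # The policy applies only to Pods labelled app=db
  podSelector:
    matchLabels:
      app: db
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        # Entry 1: Pods labelled app=web in the same namespace
        - podSelector:
            matchLabels:
              app: web
        # Entry 2 (ORed with entry 1): any Pod in a namespace labelled team=ops
        - namespaceSelector:
            matchLabels:
              team: ops
      ports:
        - protocol: TCP
          port: 5432
  egress:
    - to:
        - ipBlock:
            cidr: 10.0.0.0/24
      ports:
        - protocol: TCP
          port: 443
```

Separate entries in a `from` list are ORed. Putting `podSelector` and `namespaceSelector` in the same entry ANDs them instead. Because egress is restricted, DNS lookups from the selected Pods are also blocked unless a rule for port 53 is added. The policy is only enforced when the CNI plugin supports NetworkPolicy, which Calico does.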

Services

Services provide a way to expose Pods.

When a client makes a request to a Service, the Service routes the traffic to its Pods in a load-balanced fashion

Endpoints: the backend entities to which Services route traffic. Each Pod backing the Service has an endpoint associated with it

Service Types:

- ClusterIP: exposes the application inside the cluster network (the default type)

- NodePort: exposes the application outside the cluster on a static port on each node

- LoadBalancer: exposes the application outside the cluster through an external cloud load balancer. Works only on supported cloud platforms

apiVersion: v1
kind: Service
metadata:
  name: svc-example
spec:
  type: NodePort # or ClusterIP (then omit nodePort)
  selector:
    app: svc-pod
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30080
# kubectl get endpoints svc-example

Discovering K8s services with DNS

- Fully qualified domain name

service-name.namespace-name.svc.cluster-domain.example

The default cluster domain name is cluster.local.

- The fully qualified name is accessible from any namespace. From within the same namespace we can simply use

service-name
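A quick way to try the naming scheme is a throwaway Pod that resolves a Service by its fully qualified name. The sketch assumes a hypothetical Service `my-service` in the `default` namespace and the default `cluster.local` domain. The Pod name is also hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-test                  # hypothetical name
spec:
  restartPolicy: Never            # run the lookup once and stop
  containers:
    - name: lookup
      image: busybox:1.28
      # The FQDN resolves from any namespace; the short name "my-service"
      # is only guaranteed to resolve from Pods in the Service's own namespace
      command: ["nslookup", "my-service.default.svc.cluster.local"]
```

After the Pod completes, `kubectl logs dns-test` shows the answer from CoreDNS. Delete the Pod when finished.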

Ingress

- An object that manages external access to Services in the cluster

- Can also provide more functionality, such as SSL termination, load balancing and name-based virtual hosting

- Ingress objects don't do anything by themselves; an Ingress controller is needed for them to work

- An Ingress defines a set of routing rules; each rule has a set of paths, each with a backend

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
    - http:
        paths:
          - path: /somepath
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80

- An Ingress can also refer to a named Service port
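Below is a sketch of a named port combined with name-based virtual hosting. The Service names its port `http`, and the Ingress rule refers to that name instead of a number. The host `app.example.com`, the label `app: my-app` and the Ingress name are hypothetical. `my-service` and `/somepath` are reused from the example above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: my-app                   # hypothetical Pod label
  ports:
    - name: http                  # the name the Ingress refers to
      protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress-named          # hypothetical name
spec:
  rules:
    - host: app.example.com       # hypothetical host (name-based virtual hosting)
      http:
        paths:
          - path: /somepath
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  name: http      # instead of number: 80
```

Referring to the port by name means the Service's port number can change without editing the Ingress.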

Storage

- Container file systems are ephemeral, which is why we need volumes to persist data beyond a container's lifetime. The data is stored outside the container

- Persistent Volumes: allow us to treat storage as an abstract resource and consume it from Pods

- Volumes

apiVersion: v1
kind: Pod
metadata:
  name: vol-pod
spec:
  containers:
    - name: nginx
      image: nginx:alpine
      volumeMounts:
        - name: my-vol
          mountPath: /output
  volumes:
    - name: my-vol
      hostPath:
        path: /data

Types:

- hostPath: stores data on the Kubernetes node at the specified path

- emptyDir: stores data in a dynamically created location on the node. It only exists as long as the Pod does and is removed once the Pod goes away
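An `emptyDir` volume is declared without any path. Below is a minimal sketch with a hypothetical Pod name. The container writes a file into the volume. The file survives container restarts but is deleted together with the Pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-pod              # hypothetical name
spec:
  containers:
    - name: writer
      image: busybox
      # Write a file into the volume, then stay alive
      command: ["sh", "-c", "echo hello > /cache/hello.txt; sleep 3600"]
      volumeMounts:
        - name: cache
          mountPath: /cache
  volumes:
    - name: cache
      emptyDir: {}                # created empty when the Pod starts, removed with the Pod
```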

A PersistentVolume object details a set of attributes describing the underlying storage resource

A StorageClass allows Kubernetes administrators to specify the types of storage services they offer on their platform

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: localdisk
provisioner: kubernetes.io/no-provisioner

- The allowVolumeExpansion property determines whether a StorageClass allows volumes to be expanded (resized)

- persistentVolumeReclaimPolicy determines how the storage resources can be reused once the claim is released

- Retain: keeps all the data

- Delete: deletes the underlying storage resource. Works with cloud storage

- Recycle: automatically deletes all data so the PV can be reused (deprecated)
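A PersistentVolume that uses the `localdisk` StorageClass above might look like the sketch below. The name `my-pv`, the 1Gi capacity and the `/var/output` host path are hypothetical. `Retain` is chosen because `Recycle` is deprecated.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv                     # hypothetical name
spec:
  storageClassName: localdisk     # must match the claim's storageClassName
  persistentVolumeReclaimPolicy: Retain
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /var/output             # hypothetical path on the node
```

A claim for 100Mi from `localdisk` with `ReadWriteOnce`, like the one that follows, can bind to this PV because the class and access mode match and the PV's capacity is at least the requested size. `kubectl get pv,pvc` then shows both as `Bound`.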

A PersistentVolumeClaim represents a user's request for storage resources. It defines a set of attributes similar to those of a PV, and Kubernetes binds it to a matching PV

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc
spec:
  storageClassName: localdisk
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: vol-pod
spec:
  containers:
    - name: nginx
      image: nginx:alpine
      volumeMounts:
        - name: my-vol
          mountPath: /output
  volumes:
    - name: my-vol
      persistentVolumeClaim:
        claimName: pvc

Troubleshooting Kubernetes

- Kube API server: if the API server is down, you won't be able to interact with the cluster. Make sure the container runtime (e.g. docker or containerd) and kubelet services are up

- Node status: use kubectl get nodes to check that the nodes are running and kubectl describe node <node name> to check further details.

- If a node has a problem, ssh into the node and check the kubelet:

systemctl status kubelet

- Check whether the system Pods are running

kubectl get pods -n kube-system

- Cluster and node logs

- Check node logs using

sudo journalctl -u kubelet

or

sudo journalctl -u docker

- Cluster component logs can be found in /var/log, in files such as kube-apiserver.log or kube-scheduler.log. They can also be accessed via kubectl logs on the corresponding kube-system Pods

- Applications: check Pod status using kubectl get pod or kubectl logs <pod name>.

- Container logs

- Contain everything the container writes to stdout and stderr

kubectl logs <pod name> -c <container name>

- Networking issues

- Make sure the DNS and kube-proxy Pods are running in the kube-system namespace.

- Netshoot: run a container from the nicolaka/netshoot image in the cluster to debug the network. It includes tools useful for troubleshooting networks and Services
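The netshoot container can be started as a long-running Pod and used interactively. The sketch below uses an arbitrary Pod name and a one-hour `sleep` to keep the container alive.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: netshoot
spec:
  containers:
    - name: netshoot
      image: nicolaka/netshoot
      # Keep the container running so we can exec into it
      command: ["sleep", "3600"]
```

`kubectl exec -it netshoot -- bash` opens a shell with tools such as `dig`, `curl` and `nslookup`. Schedule it in the same namespace as the workload under investigation so that NetworkPolicies and short DNS names behave the same way they do for that workload.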

Imperative Tasks

Change context

k config use-context acgk8s

Get taints

k get node -o jsonpath='{.items[*].spec.taints}'

Highest CPU usage

k -n web top pod -l app=auth --sort-by=cpu --no-headers | head -n 1 | awk '{ print $1 }' > /k8s/0003/cpu-pod.txt

Scale deployment

k -n web scale deploy web-frontend --replicas=5

Expose deployment

k -n web expose deploy web-frontend --port=80 --dry-run=client -o yaml > 1.yaml

Create ServiceAccount

k -n web create sa webautomation

Create ClusterRole

kubectl create clusterrole pod-reader --verb=get,watch,list --resource=pods

Create ClusterRoleBinding

k create clusterrolebinding cb --clusterrole=pod-reader --serviceaccount=web:webautomation

Drain node

kubectl drain acgk8s-worker1 --ignore-daemonsets --force --delete-emptydir-data

Label namespace

kubectl label namespace user-backend app=user-backend

Backup ETCD

ETCDCTL_API=3 etcdctl \
  --endpoints=https://etcd1:2379 \
  --cacert=etcd-certs/etcd-ca.pem \
  --cert=etcd-certs/etcd-server.crt \
  --key=etcd-certs/etcd-server.key \
  snapshot save /home/cloud_user/etcd_backup.db

Restore ETCD

sudo ETCDCTL_API=3 etcdctl snapshot restore /home/cloud_user/etcd_backup.db \
  --initial-cluster etcd-restore=https://etcd1:2380 \
  --initial-advertise-peer-urls https://etcd1:2380 \
  --name etcd-restore \
  --data-dir /var/lib/etcd

Upgrade cluster components

# Control plane
sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubeadm=1.22.2-00
kubectl drain acgk8s-control --ignore-daemonsets
sudo kubeadm upgrade plan v1.22.2
sudo kubeadm upgrade apply v1.22.2
sudo apt-get install -y --allow-change-held-packages kubelet=1.22.2-00 kubectl=1.22.2-00
sudo systemctl daemon-reload
sudo systemctl restart kubelet
kubectl uncordon acgk8s-control

# Worker: drain from the control plane, then upgrade on the worker itself
kubectl drain worker --ignore-daemonsets --force
ssh worker
sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubeadm=1.22.2-00
sudo kubeadm upgrade node
sudo apt-get install -y --allow-change-held-packages kubelet=1.22.2-00 kubectl=1.22.2-00
sudo systemctl daemon-reload
sudo systemctl restart kubelet
exit
# Back on the control plane
kubectl uncordon worker

Yaml snippets

# Ingress mapping to svc
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-frontend-ingress
  namespace: web
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx-example
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web-frontend-svc
                port:
                  number: 80
---
# Storage class to allow PVC expansion
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: localdisk
provisioner: kubernetes.io/no-provisioner
allowVolumeExpansion: true
---
# Network policy applying to all Pods in the namespace: allow ingress on TCP 80
# from namespaces labelled app=user-backend, and egress on TCP 5978 to any destination
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: netpol-port
  namespace: default
spec:
  podSelector: {} # all pods
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              app: user-backend
      ports:
        - protocol: TCP
          port: 80
  egress:
    - ports:
        - protocol: TCP
          port: 5978
---
# Network policy to block all communication to and from a Pod (here: the maintenance Pod in namespace foo)
# First look up the Pod's labels:
#   kubectl -n foo get pod maintenance --show-labels
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: netpol-port
  namespace: foo
spec:
  podSelector: # select only the maintenance Pod, using its labels
    matchLabels:
      app: maintenance
  policyTypes: # no ingress/egress rules are listed, so all traffic is denied
    - Ingress
    - Egress
---
# MultiContainer Pod
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: multi
  name: multi
  namespace: baz
spec:
  containers:
    - image: nginx
      name: nginx
    - image: redis
      name: redis
---
# Sidecar container in a Pod
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: logging-sidecar
  name: logging-sidecar
  namespace: baz
spec:
  volumes:
    - name: vol
      emptyDir: {}
  initContainers:
    - name: init # we use the initContainer to make sure the file is present
      image: busybox
      args:
        - sh
        - -c
        - touch /output/output.log
      volumeMounts:
        - name: vol
          mountPath: /output
  containers:
    - name: sidecar
      image: busybox
      args:
        - sh
        - -c
        - while true; do cat /output/output.log; sleep 5; done
      volumeMounts:
        - name: vol
          mountPath: /output
    - name: logging-sidecar
      image: busybox
      args:
        - sh
        - -c
        - while true; do echo Logging data > /output/output.log; sleep 5; done
      volumeMounts:
        - name: vol
          mountPath: /output

# Debug broken nodes (when the kubelet is down on a node)
kubectl get nodes -o wide # check for NotReady status
kubectl describe node NOT_READY_NODE
ssh NOT_READY_NODE
sudo journalctl -u kubelet
sudo systemctl status kubelet
sudo systemctl start kubelet